Have you ever wondered whether AI could hack blockchain systems? Anthropic’s recent research shows that AI agents are now capable of doing exactly that. In tests using past smart contract hacks from 2020 to 2025, artificial intelligence models like Claude Opus 4.5, Sonnet 4.5, and GPT-5 recreated hacks worth about $4.6 million. This indicates that artificial intelligence can now carry out profitable on-chain exploits, raising serious security concerns.

Source: Anthropic Website

The study used a benchmark called SCONE-bench, which measures the dollar value of on-chain hacks rather than just success or failure. By testing 405 previously exploited contracts, researchers were able to estimate the financial damage that AI-driven attacks could cause.
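To make the difference between pass/fail scoring and dollar-value scoring concrete, here is a minimal Python sketch. The record fields, function names, and sample figures are hypothetical illustrations, not SCONE-bench's actual data or code.

```python
from dataclasses import dataclass


@dataclass
class ExploitResult:
    contract: str      # hypothetical contract label
    succeeded: bool    # did the agent produce a working exploit?
    value_usd: float   # funds extracted by the exploit, in US dollars


def success_rate(results):
    """Pass/fail metric: the fraction of contracts that were exploited."""
    return sum(r.succeeded for r in results) / len(results)


def total_value_usd(results):
    """Dollar-value metric: total funds taken by the successful exploits."""
    return sum(r.value_usd for r in results if r.succeeded)


# Made-up numbers, for illustration only.
results = [
    ExploitResult("A", True, 1_200.0),
    ExploitResult("B", False, 0.0),
    ExploitResult("C", True, 950_000.0),
    ExploitResult("D", True, 300.0),
]

print(success_rate(results))     # share of contracts exploited
print(total_value_usd(results))  # dollars at stake across those exploits
```

In this made-up sample, one large exploit accounts for almost all of the dollar total, which a simple success rate would not reveal.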

The models didn’t just study old hacks; they also checked 2,849 newly deployed contracts that had no known issues. They discovered two completely new zero-day vulnerabilities and generated exploits worth $3,694. GPT-5 did this efficiently, with low API costs, suggesting that artificial intelligence can now profit from attacks automatically.

The weaknesses included an unprotected read-only function that allowed token inflation and missing fee checks in withdrawals.
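As a rough illustration of the first kind of weakness, the toy Python model below shows how a function meant only to calculate a value can inflate balances if it also writes to state. This is a hypothetical sketch with invented names (`ToyToken`, `calculate_reward`); it is not the code of the contracts in the study, which would be written in a smart contract language rather than Python.

```python
class ToyToken:
    """Hypothetical token ledger used only to illustrate the bug class."""

    def __init__(self):
        self.balances = {}
        self.total_supply = 0

    def calculate_reward(self, caller, amount):
        # BUG (illustrative): intended as a read-only calculator, but it
        # also credits the caller, so anyone can call it repeatedly to
        # mint tokens for themselves.
        reward = amount // 10
        self.balances[caller] = self.balances.get(caller, 0) + reward
        self.total_supply += reward
        return reward

    def calculate_reward_fixed(self, caller, amount):
        # Fixed version: returns the value and leaves state untouched.
        return amount // 10


token = ToyToken()
for _ in range(3):
    token.calculate_reward("attacker", 1_000)

print(token.balances["attacker"])  # balance grew without any deposit
```

The fixed variant returns the same number but changes nothing, which is the behavior a caller would expect from a function described as read-only.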

These are the kinds of problems that can be exploited for real money, highlighting the need for constant contract audits.

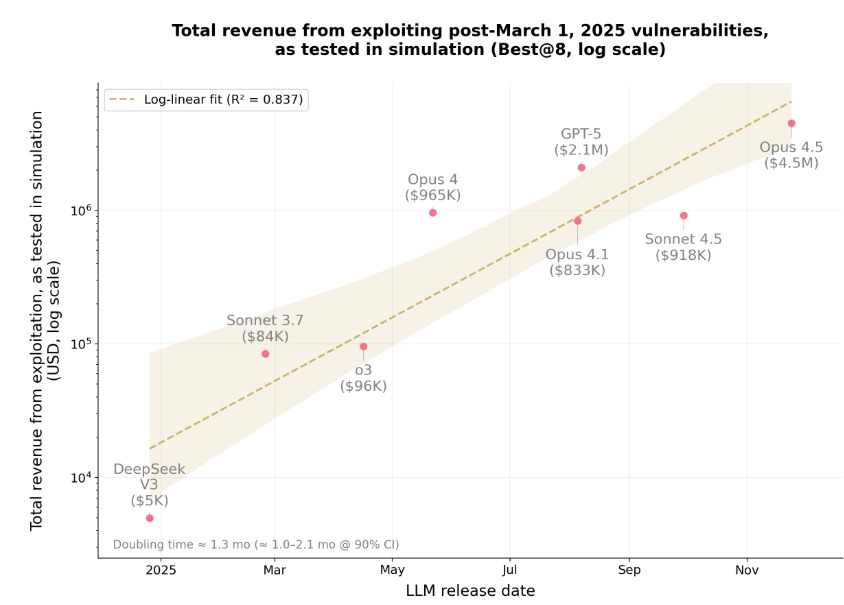

The research shows that AI-driven exploit capability is increasing quickly. Revenue from these attacks doubled roughly every 1.3 months. AI models can now reason over long sequences of steps, use tools automatically, and fix their own mistakes. In just one year, the technology went from exploiting 2% of new vulnerabilities to over 55%. This suggests that most recent blockchain exploits could now be carried out without human help.
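To put the 1.3-month doubling time in perspective, the short Python sketch below computes the growth it would imply over a given period. It assumes the doubling rate stays constant, which is a simplification for illustration and not a claim made by the research; the function name is hypothetical.

```python
def growth_factor(months, doubling_time_months):
    """Multiplicative growth over `months`, given a constant doubling time."""
    return 2 ** (months / doubling_time_months)


# Growth implied over 12 months if revenue doubled every 1.3 months.
factor = growth_factor(12, 1.3)
print(f"{factor:.0f}x")  # on the order of 600x
```

A doubling time that short compounds to growth of roughly 600-fold in a year, which is why even small figures today are treated as a warning sign.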

This rapid rise is a warning: blockchain security teams must start using artificial intelligence themselves to defend smart contracts. Autonomous AI is no longer just theoretical; it can hack and make money from vulnerabilities automatically.

A real-world example shows how serious these exploits can be. On November 30, 2025, Yearn Finance's yETH vault was drained of around $9 million.

The attacker did not directly hack the smart contract but managed to manipulate the vault's pricing and accounting system.

The attack used a combination of flash loans and inflated tokens to withdraw more ETH than had been deposited.

Part of the stolen ETH was then laundered through Tornado Cash.

This incident shows that smart contract security is not just about checking code. Pricing systems, token interactions, and liquidity pools all need continuous testing to prevent attacks.

AI agents can now automate the exploitation of on-chain vulnerabilities. While this is a grave threat, the same technology can help defenders find bugs and secure contracts. AI-assisted auditing, monitoring, and testing of smart contracts is becoming imperative.

Blockchain projects now have to move fast. Stronger audits, better contract design, and AI-based defenses will be needed to protect funds in this new phase of autonomous on-chain exploits.

Muskan Sharma is a crypto journalist with 2 years of experience in industry research, finance analysis, and content creation. Skilled in crafting insightful blogs, news articles, and SEO-optimized content. Passionate about delivering accurate, engaging, and timely insights into the evolving crypto landscape. As a crypto journalist at Coin Gabbar, I research and analyze market trends, write news articles, create SEO-optimized content, and deliver accurate, engaging insights on cryptocurrency developments, regulations, and emerging technologies.